The Pointy End of Camera Matching

Hello everyone. My name’s Stuart Attenborrow, and welcome to the semi-regular update on camera matching!

This topic has been covered extensively in the past so I’ll try not to repeat what you already know. However, for those who are new to the project: camera matching is a term we use to describe a range of techniques that all involve using the original in-game stills to position objects in 3D space. We do this to ensure we preserve the scale, position and shape of every element in the game, and it is the most time-consuming part of the process. Unfortunately it’s also the first stage of the process, which creates an immediate bottleneck whenever we start work on a new area.

Luckily, this year has seen a fundamental shift in the way we’re approaching camera matching. If we were to continue matching each frame by hand, the project would likely never see the light of day. And so we’ve changed tack. Again.

A few of our supporters have offered great suggestions for automating the process, including a technique known as photogrammetry. This involves taking lots of photos of an object and using software to generate a 3D model complete with textures. Commercial software that can do this includes Autodesk ReMake, Agisoft PhotoScan and Reality Capture, or there is something a bit more obscure like VisualSFM.

It’s never as easy as it sounds. The biggest problem we have with automating the matching process is that it requires a lot of source imagery to work. Riven might seem like it has a lot of images, but most of the time they’re too far apart, too dark, too compressed, or just plain too small to be of any use. Another small but not insignificant issue is that some areas in the game defy the laws of physics. An interior may end up being larger than is physically possible from its exterior.

This leaves us with a couple of options. Either we announce the end of Starry Expanse and our crushing defeat in the face of insurmountable odds, or we put our thinking caps on. We preferred the latter. To explain what we’re doing now, let’s first go over a brief explanation of what photogrammetry is all about.

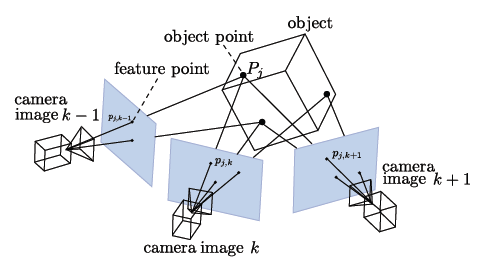

There are many stages to a photogrammetry pipeline. The first is feature detection, where high-contrast or distinctive shapes are identified in each image. The second is feature matching, where each image is compared to every other image to find the features they have in common. Next is the sparse reconstruction stage, where 2D becomes 3D. This results in a number of points in 3D space representing the subject, and a number of camera positions that represent where each image was taken. Assuming each stage completed successfully, a final stage can be performed where the sparse reconstruction is used to generate a dense point cloud of the subject. In short, there’s a ton of math involved, created by some very intelligent people (thanks everyone!).
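To make the feature matching stage a little more concrete, here is a minimal sketch in Python. It is not the code any of the packages above actually run; it only illustrates one common approach, nearest-neighbour matching with a ratio test. The function name `match_features` and the toy descriptor values are made up for illustration, and real descriptors would come from a feature detector rather than being typed in by hand.

```python
import numpy as np

def match_features(desc_a, desc_b, ratio=0.75):
    """Return (i, j) pairs where descriptor i of image A matches descriptor j of image B.

    Hypothetical helper for illustration only. Needs at least two descriptors in B.
    """
    # Pairwise Euclidean distance between every descriptor in A and every one in B.
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Ratio test: keep the match only if the best candidate is clearly
        # closer than the runner-up, otherwise the feature is ambiguous.
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

# Toy descriptors (invented values standing in for real feature descriptors).
desc_a = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.5, 0.0]])
desc_b = np.array([[0.0, 0.9, 0.1], [1.0, 0.1, 0.0], [0.0, 0.0, 1.0]])

matches = match_features(desc_a, desc_b)
print(matches)
```

The third descriptor in `desc_a` sits almost exactly between two candidates in `desc_b`, so the ratio test throws it away. Dark, small, heavily compressed images produce a lot of features like that one, which is a large part of why automated matching struggles.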

The images in Riven usually fall down at the sparse reconstruction stage. There aren’t enough matching features found in enough different images for an automated photogrammetry pipeline to rebuild the 3D scene to a good standard (or at all). To solve this issue, we’re performing the feature detection and matching stages by hand. Whilst there are far fewer matching features between images, the quality of the matches is far better. This means the reconstruction stage is successful, we get a 3D scene of a few key points, and most importantly all of the original camera positions!
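For the curious, here is a rough sketch of the "2D becomes 3D" step for a single hand-matched point. It makes a big simplifying assumption: both cameras are already known, whereas the real reconstruction has to solve for the cameras and the points together. The technique shown is standard linear (DLT) triangulation, and the intrinsics, camera placement and helper names are all invented for the example rather than taken from our pipeline.

```python
import numpy as np

def projection_matrix(K, R, t):
    """Build the 3x4 camera matrix P = K [R | t]."""
    return K @ np.hstack([R, t.reshape(3, 1)])

def project(P, X):
    """Project a 3D point into pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two images."""
    # Each 2D observation gives two linear constraints on the homogeneous 3D point.
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

# Assumed intrinsics and camera poses (illustrative values only).
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = projection_matrix(K, np.eye(3), np.zeros(3))
# Second camera sits one unit to the right of the first, so t = -R @ C = (-1, 0, 0).
P2 = projection_matrix(K, np.eye(3), np.array([-1.0, 0.0, 0.0]))

# A made-up scene point and the pixel where a person would click it in each image.
point = np.array([0.5, -0.2, 5.0])
x1, x2 = project(P1, point), project(P2, point)

recovered = triangulate(P1, P2, x1, x2)
print(recovered)
```

With perfect clicks the point comes back exactly. With real, slightly-off clicks the answer is only approximate, which is why a handful of high-quality manual matches can beat a pile of noisy automatic ones.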

It sounds like a lot of work (and it still is), but it’s an order of magnitude less work than the previous method. Plus it results in a much more accurate outcome. At this point we block out the scene using a simple mesh (you may be familiar with the greyscale landscapes we’ve shared previously). We use the key points as guides, and the view from each matched camera to align the geometry. The blockout can then be handed off to the modelers to create the final assets.

My involvement so far has focused on matching the full motion videos. On the upside, there are a lot of frames in each video to match, but on the downside the videos are heavily compressed. For the following maglev ride I’ve had to match both directions and merge the results to ensure the locations of the supports and docks are accurate.

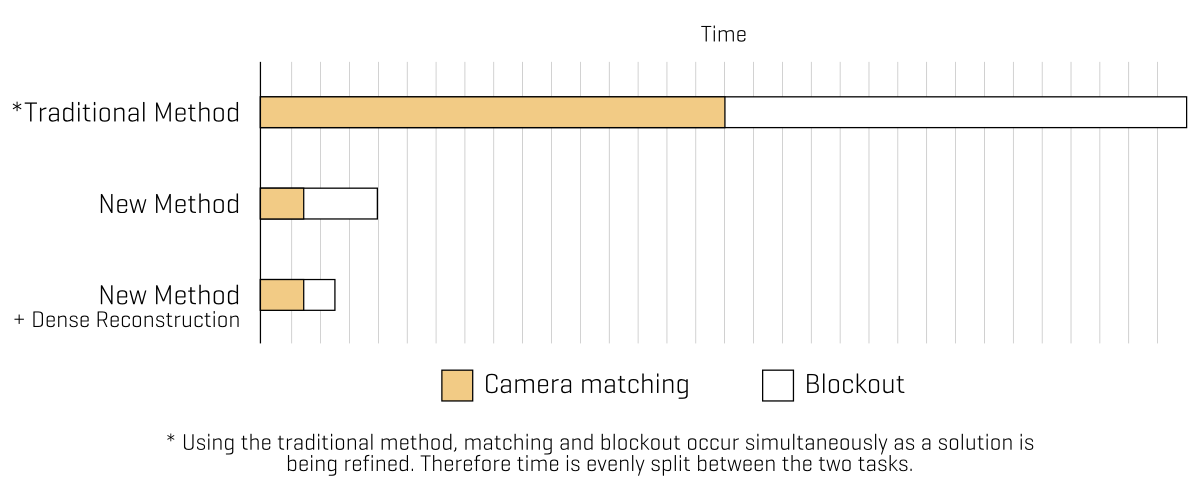

In addition to this, I’ve been working on a way of incorporating a dense point cloud reconstruction process into our pipeline. Since we’re manually matching the images we haven’t been able to use the same methods employed by the software mentioned above. Preliminary results are very promising. This makes the blockout process semi-automated and greatly reduces the time spent creating the initial geometry. Plus we get textured meshes from the match!

To put this task in perspective I’ve put together a little graph. I think the results speak for themselves.

With the camera matching process taking a huge leap forward, our modeling team won’t know what hit them. Expect more great things to come. If you’d like to hear more about what we’re doing, have any questions for us, or if you just feel like a chat, head on over to our new Discord server!

Finally, a big welcome to our newest member Cameron Craig, who’ll be assisting with the creation of our all-important characters.

April 18th, 2018 at 4:39 am

Still impressed by the huge amount of work you do, people. And this new approach seems to be very efficient! :thumbs up:

April 18th, 2018 at 5:53 am

Thanks for all the information. You’ve taken a big step. :)

April 18th, 2018 at 6:09 am

Fantastic work, I love it! 😀

Up next: realRiven Masterpiece Edition, this time remade using Voxels.

April 18th, 2018 at 6:32 am

I absolutely LOVE technical behind-the-scenes stuff like this. Great work guys!

April 18th, 2018 at 11:15 am

You are doing a great job guys … and with this new technique you will save work

April 18th, 2018 at 12:25 pm

Was it quite a shock that Cyan is re-releasing Riven this year? Are you in touch with them – perhaps they are retouching assets and you can benefit from this knowledge, or perhaps Starry Expanse is going to be part of the 25th anniversary package? 😉

April 21st, 2018 at 1:03 am

I didn’t know Cyan was going to do that until I read your post. I’m very hyped about it!

It would be nice if realRiven would be part of it, but I doubt it will be ready by then. Maybe it could be added for free for those who bought the full package whenever it is finished?

Cyan knows this project is happening. The Starry Expanse Project informed them many years ago at a Mysterium convention, and there is some kind of contract where Cyan gives them some help while the Project can’t tell anyone what kind of help they are given. It could be old 3D models used to create the original game, or the original textures, or something like that, but it’s all just a guess. There’s a post about this on one of the older pages of the site.

April 22nd, 2018 at 11:16 am

Unfortunately, they are not touching the graphics or sounds. They are simply making all the Myst games available and compatible with Windows 10. Since Riven has already been available for years, not much is changing on that front.

It will be the first time Exile and Revelation will be available, though, so I’m excited for that. Plus the pure awesomeness of the box set they are building for the Kickstarter.

April 18th, 2018 at 1:43 pm

This is a really cool read, thanks to all of the people on the team who keep us updated on this project! Design on video games is well beyond me lol so it is cool getting a breakdown of how to do something like this

I was always on board and excited about the project, but especially ever since the showing of Age 233 from you guys, it blew my mind and I have always been excited to see these updates since 🙂 Keep up the great work! We all greatly appreciate it!

April 18th, 2018 at 9:25 pm

Very cool to see photogrammetry used to 3D reconstruct one of my favorite games. I’m currently using it at work to reconstruct urban areas from satellite imagery. It’s a tough process to get right and definitely one that’s still being heavily researched.

April 19th, 2018 at 9:42 am

What software do you use for this method, and is it open source?

April 19th, 2018 at 2:07 pm

Ok, so you have this fancy new method of camera matching that’s going to save you tons of time. Do you only use this on areas that you haven’t done that with? Or do you have to go back to square one and re-match everything?

April 19th, 2018 at 2:42 pm

The priority is to match new areas first so we end up with 100% coverage. Then go back and redo areas where the old match was problematic.

April 19th, 2018 at 8:29 pm

That would depend whether or not there is a noticeable difference in the final result. If it’s just saving time without altering the results, redoing the work would be counterproductive.

So, dev team: is it altering the results such that you’d have to redo work that you’ve already done?

September 24th, 2018 at 9:35 am

I’ve been reconstructing bits of another oldie, “Drowned God: Conspiracy Of The Ages” published by Inscape in 96. My attempt at making a conceptual sequel for self-gratification. Don’t have much to go on other than that I was told it was built in Macromedia 5. Been struggling with my current camera matching. Figuring out FOV in particular has been a real pain.